Last week I reported on how I set up a in-memory DB2 database on IBM Bluemix and loaded some historic weather data. Over the last couple days I used some spare time to play with the Cloud-based analytic capabilities that are provided as part of the

Softlayer/Bluemix/DB2 combination. Most of the time went into learning (some basics of)

R, an environment for statistical computing and graphics. As an example I wanted to find out what temperatures to expect for a possible September vacation on the German island of

Norderney.

[Update 2014-11-04: The Analytics Warehouse service on Bluemix is now called

dashDB]

For my small exercise I used data provided by the

German Meteorological Service "Deutscher Wetterdienst". It allows to freely download and use (under some conditions) data from several decades of weather observation.

I uploaded the data to DB2/Bluemix as described in my previous post.

|

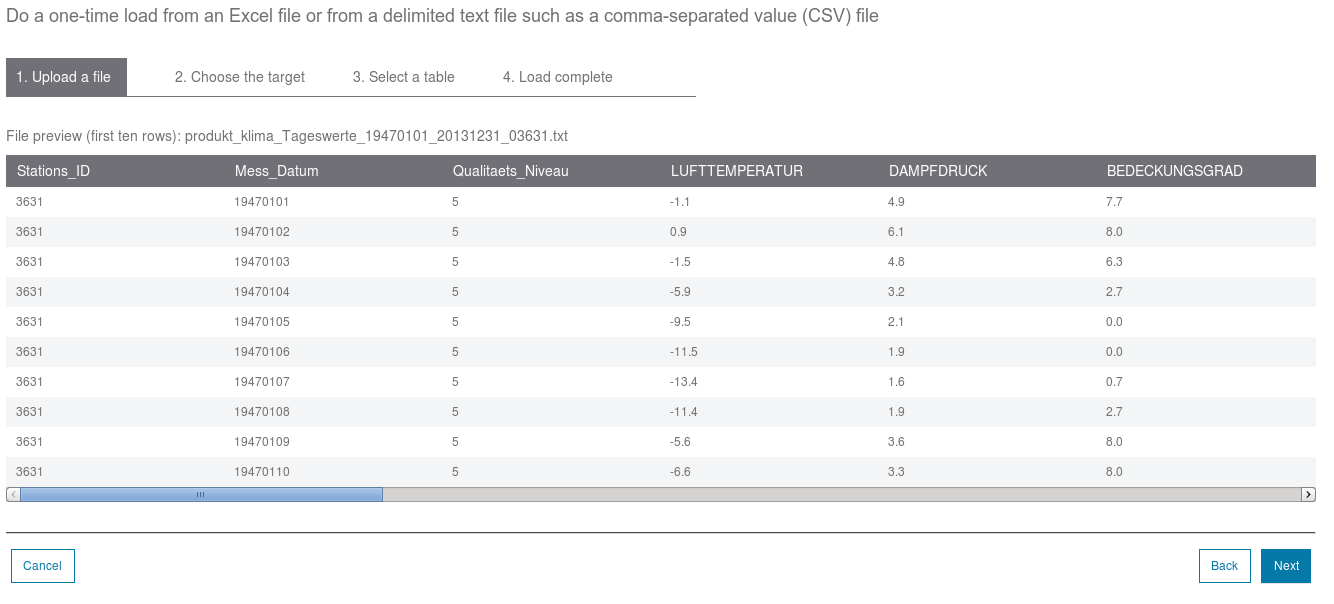

| Bluemix: Change DB2 column name and type |

While playing with the data I noticed that the column names required escaping of quotes and the observation dates were stored as integer values (yyyymmdd). In a second upload I simplified the column names and adapted the column data type using the DB2 load wizard (see picture). Thereafter I was set for my experiments with

R.

The DB2 Cloud environment provides several examples for programming in R, a special function library "bluR" to easily connect R with DB2-based data, and it features the

RStudio to develop, test, and execute code in R. Within RStudio it is possible to execute several demos to learn more about analytics, graphing, and data processing. For the DB2 in-memory database API for R there is a demo as well. You can invoke it using the "demo(blur)" command:

|

| DB2 API demo for R in RStudio |

The demo shows how to connect to DB2, execute a query and use the fetched data for analytic processing in R. Last week I already tweeted about how I tested escaping of quote characters (use C style, not SQL style):

The data set which I uploaded to DB2 has daily minimum and maximum temperatures (and lots of other meteorological) for about 70 years. I used a SQL query and then the ggplot2 library to create a graphic. It shows the band for the minimum temperatures for each September day as well as the band for the maximum daily temperatures.

|

| DB2 with R: Historic September temperatures |

The code for this graphic is pretty simple (and I started last week looking at R and DB2) and

available from my Github account:

1: ########### R script to analyze historic weather data for min/max values

2: ## Written by Henrik Loeser

3: ## Connection handle con to BLU for Cloud data warehouse is provided already

4: ## For plotting, we are using ggplot2 package

5: ##

6: library(ggplot2)

7: library(bluR)

8:

9: ## initialize DB2 connection and environment

10: con <- bluConnect("BLUDB","","")

11: bluAnalyticsInit(con)

12:

13: ## query DB2 weather data and fetch min/max values of min/max values

14: ## (lower/upper boundary each)

15: query<-paste('select max(lufttemperatur_maximum) as maxmax,min(lufttemperatur_minimum) as minmin,min(lufttemperatur_maximum) as minmax,max(lufttemperatur_minimum) as maxmin,tag from (select lufttemperatur_maximum, lufttemperatur_minimum, day(mdatum) as tag from blu01023.klima where month(mdatum)=9) group by tag order by tag asc')

16: df <- bluQuery(query,as.is=F)

17:

18: ## Some plotting needs to be done

19: jpeg(type='cairo',"tempe.jpg",width=800,height=600)

20: ggplot(df, aes(x = TAG))+ylab("Temperature")+xlab("Day")+

21: geom_ribbon(aes(ymin = MINMIN, ymax=MAXMIN), fill='blue')+

22: geom_ribbon(aes(ymin = MAXMAX, ymax=MINMAX), fill='red')+

23: geom_ribbon(aes(ymin = MAXMIN, ymax=MINMAX), fill='white')+

24: geom_line(aes(y = MINMIN), colour = 'black') +

25: geom_line(aes(y = MAXMIN), colour = 'black') +

26: geom_line(aes(y = MINMAX), colour = 'black') +

27: geom_line(aes(y = MAXMAX), colour = 'black')

28:

29: sink('/dev/null')

30:

31: bluClose(con)

32: ## connection is closed, we are done

Pretty cool (my opinion)! I am already turning into a data scientist. And you can test this yourself on

IBM Bluemix with the Analytics Warehouse service (

DB2 in-memory database feature).