This repo provides the PyTorch implementation of the work. Some code comments still need to be organized.

- Ubuntu 16.04/18.04, CUDA 10.1/10.2, python >= 3.6, PyTorch >= 1.6, torchvision

- Install `detectron2` from source
- `sh scripts/install_deps.sh`
- Compile the cpp extension for farthest points sampling (fps): `sh core/csrc/compile.sh`
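
For readers unfamiliar with farthest points sampling, here is a minimal pure-Python sketch of the greedy algorithm. It is only an illustration and is not the compiled extension from this repo; the names `squared_distance` and `farthest_point_sampling` and their parameters are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length coordinate tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_sampling(points, num_samples, start_index=0):
    """Return indices of num_samples points chosen greedily to be far apart."""
    if not 0 < num_samples <= len(points):
        raise ValueError("num_samples must be between 1 and len(points)")
    selected = [start_index]
    # min_sq_dist[i]: squared distance from point i to its nearest selected point
    min_sq_dist = [squared_distance(p, points[start_index]) for p in points]
    while len(selected) < num_samples:
        # The next sample is the point farthest from everything selected so far
        next_index = max(range(len(points)), key=lambda i: min_sq_dist[i])
        selected.append(next_index)
        for i, p in enumerate(points):
            min_sq_dist[i] = min(min_sq_dist[i], squared_distance(p, points[next_index]))
    return selected


points = [(0, 0, 0), (1, 0, 0), (10, 0, 0), (0, 5, 0)]
print(farthest_point_sampling(points, 3))  # [0, 2, 3]
```

This sketch costs O(N * K) distance evaluations for N points and K samples, which is one reason a compiled implementation is preferable for dense object models.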

Download the 6D pose datasets (LM, LM-O, YCB-V) from the BOP website and VOC 2012 for background images. Please also download the image_sets and test_bboxes from here (BaiduNetDisk, OneDrive, password: qjfk).

The structure of datasets folder should look like below:

    # recommend using soft links (ln -sf)
    datasets/
    ├── BOP_DATASETS
        ├──lm
        ├──lmo
        ├──ycbv
    ├── lm_imgn  # the OpenGL rendered images for LM, 1k/obj
    ├── lm_renders_blender  # the Blender rendered images for LM, 10k/obj (pvnet-rendering)
    ├── VOCdevkit

- `lm_imgn` comes from DeepIM, which can be downloaded here (BaiduNetDisk, OneDrive, password: vr0i).
- `lm_renders_blender` comes from pvnet-rendering; note that we do not need the fused data.
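
Before training, it can help to confirm that the folder layout described above is in place. The following is a small sketch, not part of the repo: the helper name `missing_dataset_dirs` and the `EXPECTED_DIRS` list are hypothetical, with the entries copied from the structure listed above.

```python
from pathlib import Path

# Entries copied from the datasets/ structure above, relative to its root.
EXPECTED_DIRS = [
    "BOP_DATASETS/lm",
    "BOP_DATASETS/lmo",
    "BOP_DATASETS/ycbv",
    "lm_imgn",
    "lm_renders_blender",
    "VOCdevkit",
]


def missing_dataset_dirs(root="datasets"):
    """Return the expected sub-directories that are absent under root."""
    root = Path(root)
    # Path.is_dir() follows symlinks, so soft-linked folders count as present.
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dataset_dirs()
    if missing:
        print("Missing under datasets/:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```

The check only tests that the directories exist; it does not validate their contents.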

    ./core/gdrn_modeling/train_gdrn.sh <config_path> <gpu_ids> (other args)

Example:

    ./core/gdrn_modeling/train_gdrn.sh configs/gdrn/lm/a6_cPnP_lm13.py 0  # multiple gpus: 0,1,2,3
    # add --resume if you want to resume from an interrupted experiment.

Our trained NMPose models can be found here (BaiduNetDisk, password: flx3).

    ./core/gdrn_modeling/test_gdrn.sh <config_path> <gpu_ids> <ckpt_path> (other args)

Example:

    ./core/gdrn_modeling/test_gdrn.sh configs/gdrn/lmo/a6_cPnP_AugAAETrunc_BG0.5_lmo_real_pbr0.1_40e.py 0 output/gdrn/lmo/a6_cPnP_AugAAETrunc_BG0.5_lmo_real_pbr0.1_40e/gdrn_lmo_real_pbr.pth
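
When launching several runs from a script, the argument order of the two shell scripts above can be assembled programmatically. The sketch below is an assumption-laden convenience, not something shipped with the repo: `build_gdrn_command` is a hypothetical helper, and it only relies on the positional order and the `--resume` flag documented above.

```python
import shlex


def build_gdrn_command(script, config_path, gpu_ids, ckpt_path=None, extra_args=()):
    """Assemble the argument list: <config_path> <gpu_ids> [<ckpt_path>] (other args)."""
    cmd = [script, config_path, ",".join(str(g) for g in gpu_ids)]
    if ckpt_path is not None:
        # Only the test script takes a checkpoint path as third argument.
        cmd.append(ckpt_path)
    cmd.extend(extra_args)
    return cmd


train_cmd = build_gdrn_command(
    "./core/gdrn_modeling/train_gdrn.sh",
    "configs/gdrn/lm/a6_cPnP_lm13.py",
    gpu_ids=[0, 1, 2, 3],
    extra_args=["--resume"],
)
# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(arg) for arg in train_cmd))
# To actually launch it: subprocess.run(train_cmd, check=True)
```

Passing the command as a list to `subprocess.run` avoids shell quoting issues with long config and checkpoint paths.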

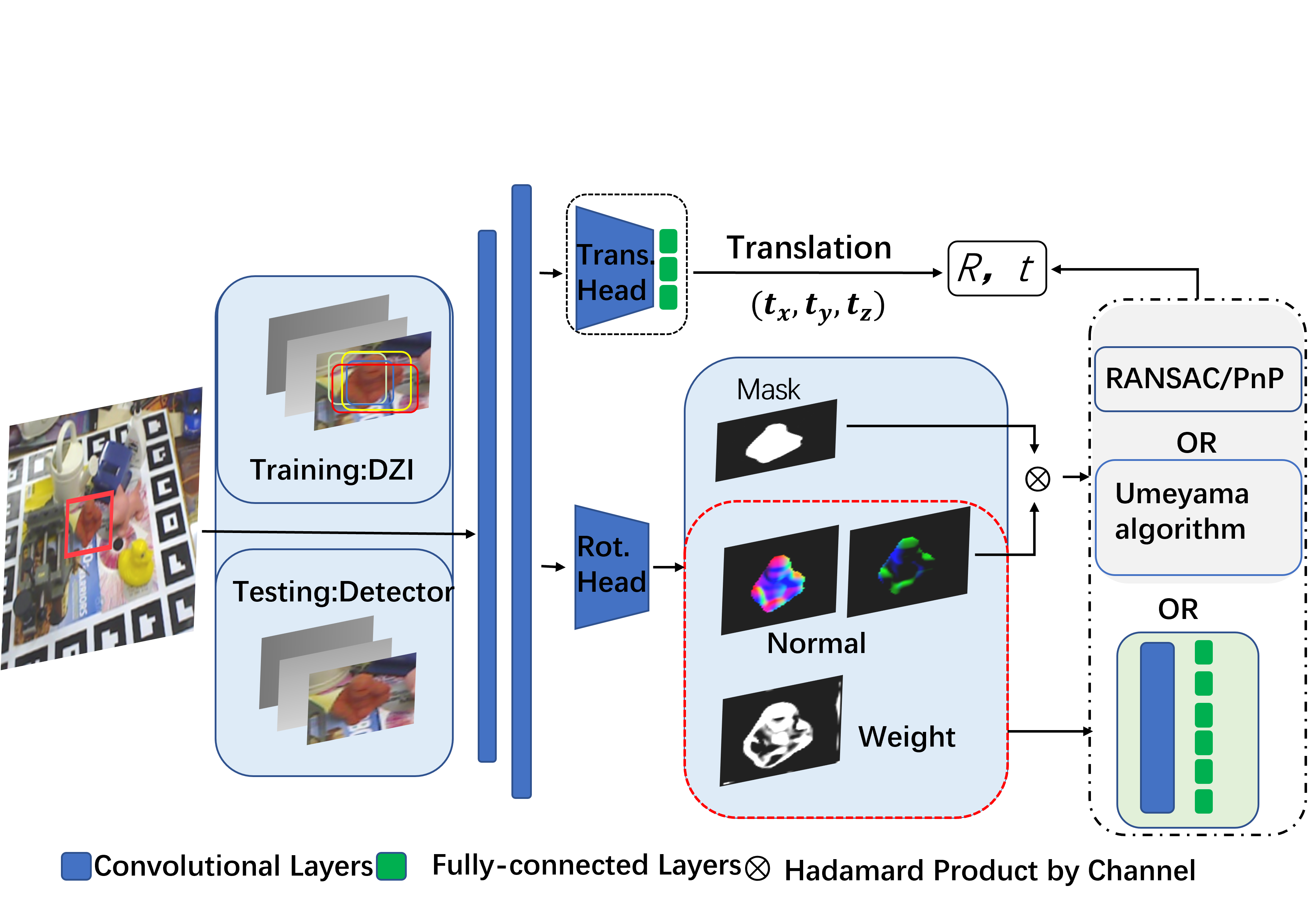

This work could not have been completed without the following references; many thanks to their authors for their contributions: CDPN, GDR-Net.