An implementation of a Vanilla GAN that learns to generate 5 different Gaussian distributions.

The GAN learned only four of the five distributions, not all of them. This failure is called mode collapse. WGAN, in contrast, does not collapse when the training data contains many distributions; see the WGAN repository.

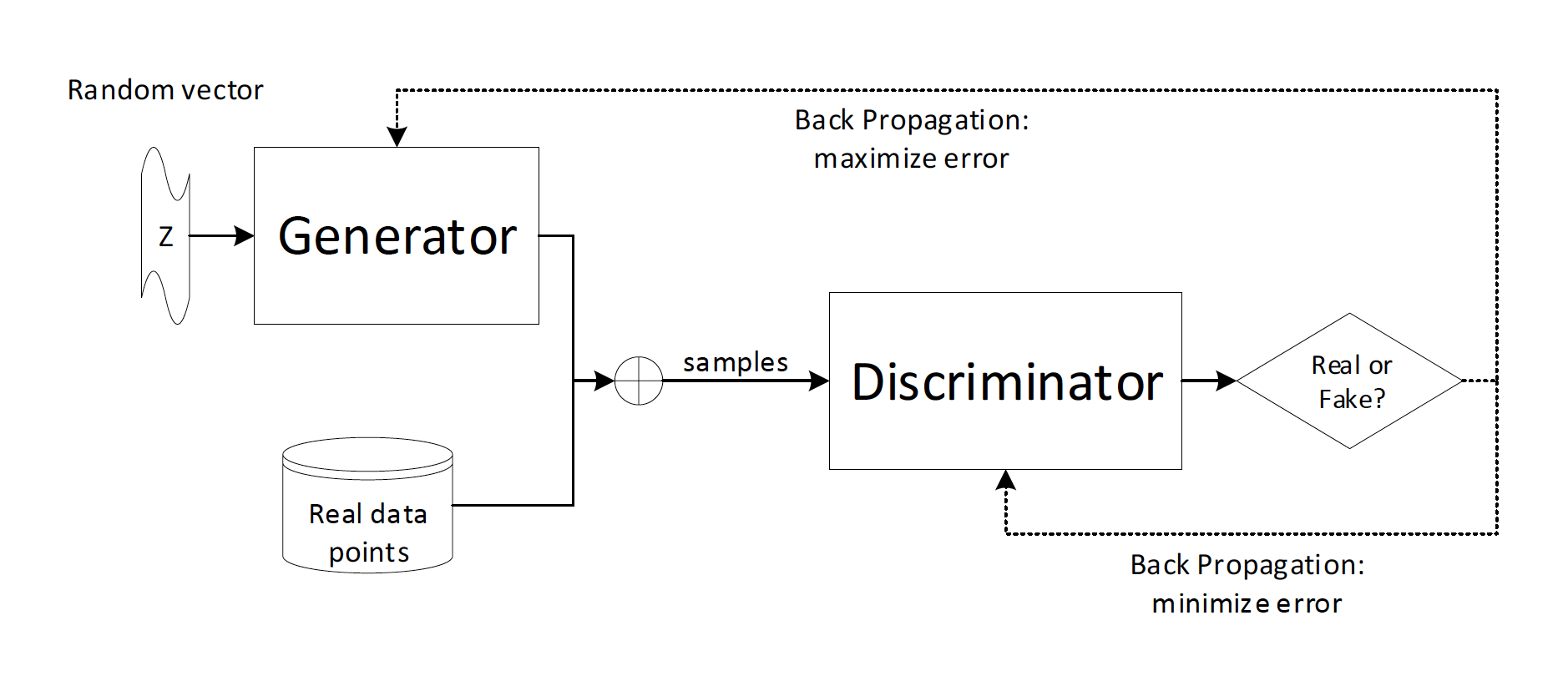
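One way to make the "four of five" observation concrete is to count how many samples land near each mode. This is a minimal sketch under stated assumptions: the README does not say where the 5 Gaussians are, so the layout below (5 means evenly spaced on a circle of radius 2, standard deviation 0.1) is hypothetical, and `sample_mixture`, `mode_counts` and `covered_modes` are illustrative helpers, not functions from `vanilla_gan.py`.

```python
import math
import random

# Hypothetical layout: the README does not say where the 5 Gaussians are,
# so this sketch assumes 5 means evenly spaced on a circle of radius 2, std 0.1.
NUM_MODES = 5
RADIUS = 2.0
STD = 0.1
MEANS = [
    (RADIUS * math.cos(2 * math.pi * k / NUM_MODES),
     RADIUS * math.sin(2 * math.pi * k / NUM_MODES))
    for k in range(NUM_MODES)
]


def sample_mixture(n, rng, modes=range(NUM_MODES)):
    """Draw n 2-D points: pick one of `modes` uniformly, then add Gaussian noise."""
    modes = list(modes)
    points = []
    for _ in range(n):
        mx, my = MEANS[rng.choice(modes)]
        points.append((rng.gauss(mx, STD), rng.gauss(my, STD)))
    return points


def mode_counts(points, radius=3 * STD):
    """Count, per mode, the points whose nearest mean is within `radius`."""
    counts = [0] * NUM_MODES
    for x, y in points:
        dists = [math.hypot(x - mx, y - my) for mx, my in MEANS]
        nearest = min(range(NUM_MODES), key=lambda k: dists[k])
        if dists[nearest] <= radius:
            counts[nearest] += 1
    return counts


def covered_modes(points, min_count=10):
    """Number of modes that received at least `min_count` nearby samples."""
    return sum(1 for c in mode_counts(points) if c >= min_count)


if __name__ == "__main__":
    rng = random.Random(0)
    real = sample_mixture(1000, rng)
    # Stand-in for a collapsed generator: it only ever produces the first 4 modes.
    collapsed = sample_mixture(1000, rng, modes=range(4))
    print("real:", mode_counts(real), "covered:", covered_modes(real))
    print("collapsed:", mode_counts(collapsed), "covered:", covered_modes(collapsed))
```

Applied to samples drawn from a trained generator, a count of 4 covered modes instead of 5 would correspond to the mode collapse described above.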

- GAN Architecture

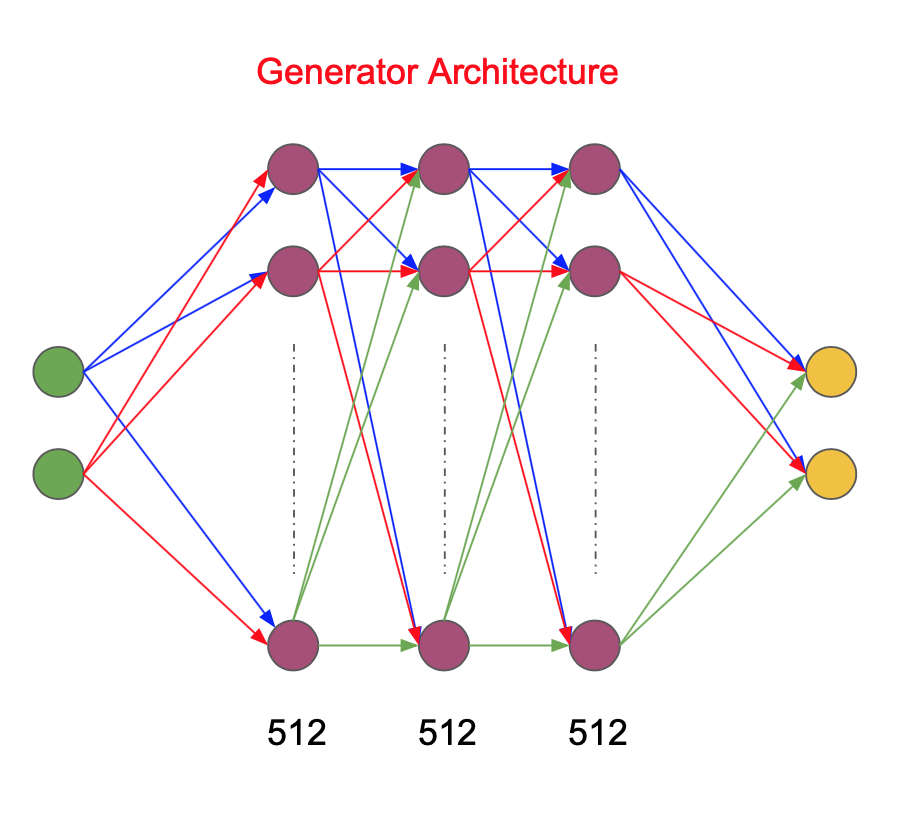

- Generator Architecture

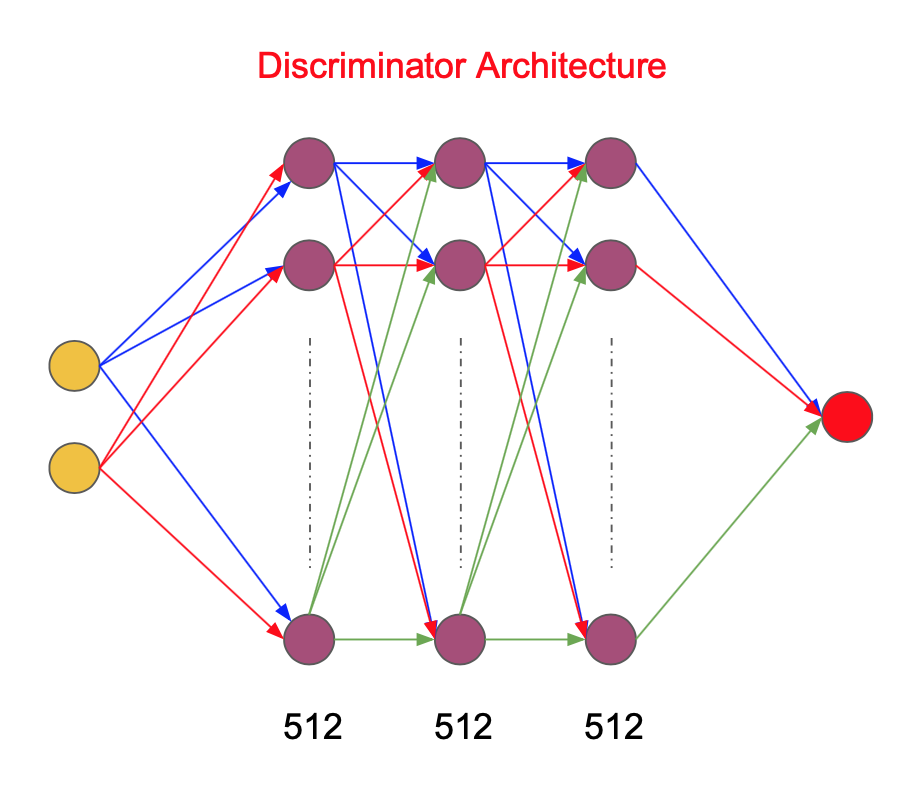

- Discriminator Architecture

- How to train the model

A GAN consists of a generative model, the Generator, and a discriminative model, the Discriminator.

The Generator takes a random vector z as input and outputs new data. The Discriminator tries to distinguish the data produced by the Generator from the real data. Our goal is to train the Generator to produce fake data that looks like the real data, until the Discriminator can no longer tell the real data and the fake data apart. Each network tries to fool the other, as in a minimax game.
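The minimax game described above is commonly written as the following value function, where $p_{\text{data}}$ is the distribution of the real samples and $p_z$ the distribution of the input vector z (this notation is assumed here, not taken from this repository):

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

The TensorFlow generator loss in this README uses labels of 1 for the fake logits, so minimizing `g_loss` maximizes $\log D(G(z))$ rather than minimizing $\log(1 - D(G(z)))$; this is the common non-saturating variant of the same game.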

G tries to maximize the probability that D assigns to the fake data, while D tries to minimize the probability of the fake data and maximize the probability of the real data.

The Generator consists of an input layer of 2 neurons for the z vector, 3 hidden layers of 512 neurons, and an output layer of 2 neurons. The 3 hidden layers use ReLU activations and the output layer is linear.

The Discriminator consists of an input layer of 2 neurons for the training data, 3 hidden layers of 512 neurons with ReLU activations, and an output layer of 1 neuron with a sigmoid activation.
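The two architectures can be sketched as plain-Python forward passes, which makes the layer sizes and activations explicit without requiring TensorFlow. The layer sizes come from the descriptions above; the weight initialisation (Gaussian with std 0.05, zero biases), the standard-normal z, and every function name here are assumptions for illustration, not the code in `vanilla_gan.py`.

```python
import math
import random

# Layer sizes from the README; the initialisation scale and z ~ N(0, 1) are assumptions.
GEN_SIZES = [2, 512, 512, 512, 2]   # z (2) -> 3 x 512 ReLU -> 2, linear output
DISC_SIZES = [2, 512, 512, 512, 1]  # x (2) -> 3 x 512 ReLU -> 1, sigmoid output


def init_mlp(sizes, rng, scale=0.05):
    """One (weights, biases) pair per layer; weights[i][j] links input i to unit j."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.gauss(0.0, scale) for _ in range(n_out)] for _ in range(n_in)]
        layers.append((weights, [0.0] * n_out))
    return layers


def dense(x, weights, biases):
    """Affine layer: out[j] = sum_i x[i] * weights[i][j] + biases[j]."""
    out = list(biases)
    for xi, row in zip(x, weights):
        for j, w in enumerate(row):
            out[j] += xi * w
    return out


def mlp_logits(layers, x):
    """ReLU after every hidden layer, no activation after the last one."""
    for weights, biases in layers[:-1]:
        x = [max(0.0, a) for a in dense(x, weights, biases)]
    weights, biases = layers[-1]
    return dense(x, weights, biases)


def sigmoid(a):
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def generator(layers, z):
    return mlp_logits(layers, z)          # linear output: one generated 2-D point


def discriminator(layers, x):
    logit = mlp_logits(layers, x)[0]      # plays the role of D_logit_real / D_logit_fake
    return sigmoid(logit), logit          # probability that x is real, and its logit


if __name__ == "__main__":
    rng = random.Random(0)
    G = init_mlp(GEN_SIZES, rng)
    D = init_mlp(DISC_SIZES, rng)
    z = [rng.gauss(0.0, 1.0) for _ in range(2)]
    fake = generator(G, z)
    prob, logit = discriminator(D, fake)
    print("fake sample:", fake, "D(fake):", prob)
```

Returning the logit alongside the probability mirrors the loss code in this README, which feeds logits (not sigmoid outputs) to `tf.nn.sigmoid_cross_entropy_with_logits`.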

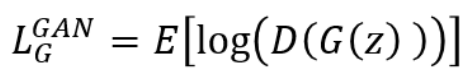

The Generator tries to maximize the probability that the Discriminator classifies the generated data as real.

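TensorFlow code for the generator loss function:

```python
# Labels of 1: G is rewarded when D classifies the generated samples (D_logit_fake) as real
g_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D_logit_fake, labels=tf.ones_like(D_logit_fake)))
```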

The Discriminator tries to minimize the probability it assigns to the generated data and to maximize the probability it assigns to the real data.

TensorFlow code for the discriminator loss function:

```python
# Real samples are labelled 1, generated samples 0
D_loss_real = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D_logit_real, labels=tf.ones_like(D_logit_real)))
D_loss_fake = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D_logit_fake, labels=tf.zeros_like(D_logit_fake)))
d_loss = D_loss_real + D_loss_fake
```
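To see what these TensorFlow calls compute, here is a plain-Python sketch of the same two losses. It re-implements the numerically stable formula that the TensorFlow documentation gives for `tf.nn.sigmoid_cross_entropy_with_logits`, `max(x, 0) - x * z + log(1 + exp(-|x|))`; `generator_loss` and `discriminator_loss` are illustrative names, not functions from `vanilla_gan.py`.

```python
import math


def sigmoid_cross_entropy_with_logits(logit, label):
    """Stable form of -[z*log(sigmoid(x)) + (1-z)*log(1-sigmoid(x))] for logit x, label z."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def mean(values):
    return sum(values) / len(values)


def generator_loss(d_logit_fake):
    # Same as g_loss: fake logits paired with labels of 1.
    return mean([sigmoid_cross_entropy_with_logits(l, 1.0) for l in d_logit_fake])


def discriminator_loss(d_logit_real, d_logit_fake):
    # Same as d_loss: real logits with labels of 1 plus fake logits with labels of 0.
    d_loss_real = mean([sigmoid_cross_entropy_with_logits(l, 1.0) for l in d_logit_real])
    d_loss_fake = mean([sigmoid_cross_entropy_with_logits(l, 0.0) for l in d_logit_fake])
    return d_loss_real + d_loss_fake


if __name__ == "__main__":
    # A Discriminator that outputs logit 0 everywhere cannot tell real from fake.
    print("g_loss:", generator_loss([0.0, 0.0]))        # log 2
    print("d_loss:", discriminator_loss([0.0], [0.0]))  # 2 * log 2
```

With logits of 0 (D outputs probability 0.5 for everything), `g_loss` is log 2 ≈ 0.693 and `d_loss` is 2 log 2 ≈ 1.386; each loss decreases as its own network wins the game.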

To train the model to generate the 5 Gaussian distributions, run `python vanilla_gan.py` in the console. The results for each epoch are saved in the `tf_vanilla_gan_results` folder.