This process is way too slow #199

Comments

|

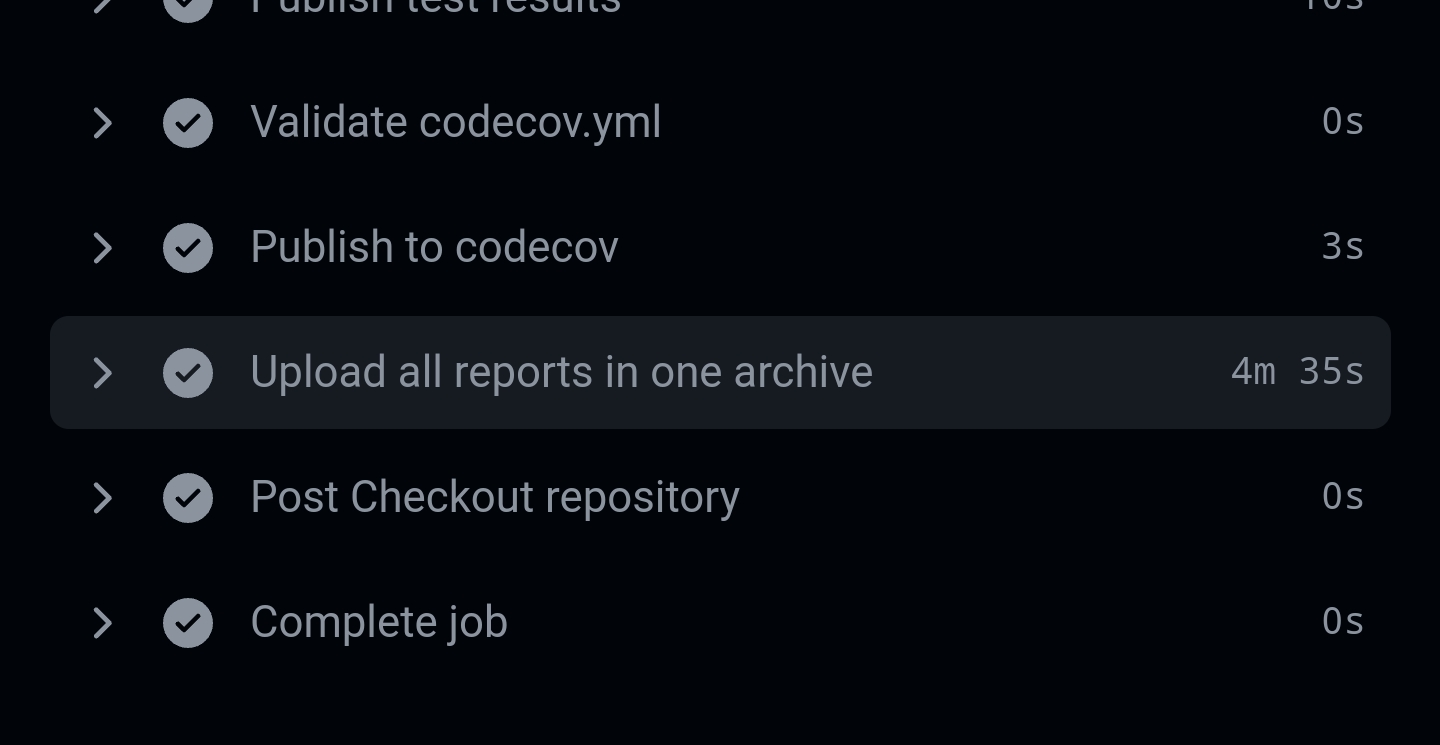

How many files are you uploading? General telemetry indicates that each file takes 100 ms to upload, with 2 concurrent uploads happening at a time (you can see all this information by turning on step debugging). Note that uploads from self-hosted runners will be slower. If you're uploading hundreds or thousands of files, then you can create a zip yourself beforehand using the steps here and upload that: https://github.com/actions/upload-artifact#too-many-uploads-resulting-in-429-responses. That will significantly reduce the number of HTTP calls and your upload will be much faster. Unfortunately, you will have a zip of a zip when you download the artifact until we add the option to download files individually from the UI. |
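As a rough back-of-the-envelope check of those figures, here is a minimal sketch that assumes the quoted telemetry holds (100 ms per file, 2 uploads in flight) and that per-request overhead dominates, so file size is ignored. The function name and defaults are illustrative only and are not part of any action.

```python
import math


def estimate_upload_seconds(file_count, per_file_seconds=0.1, concurrency=2):
    """Estimate wall-clock upload time when per-file overhead dominates.

    Assumption: files go up in "waves" of `concurrency` requests, and every
    request costs roughly `per_file_seconds` regardless of file size.
    """
    if file_count < 0 or concurrency < 1:
        raise ValueError("file_count must be >= 0 and concurrency >= 1")
    waves = math.ceil(file_count / concurrency)
    return waves * per_file_seconds


if __name__ == "__main__":
    # Compare many small files against a single pre-built archive.
    for count in (1, 100, 4500):
        seconds = estimate_upload_seconds(count)
        print(f"{count} file(s): about {seconds:.1f} s of request overhead")
```

Under this model the overhead grows linearly with the file count, which is why bundling everything into one archive before uploading removes most of it.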

|

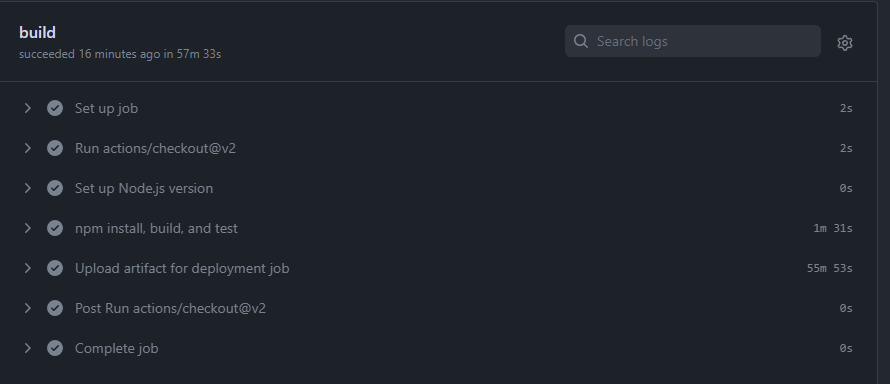

I tried that too. I zipped it up in the build and uploaded that as the artifact, which shaved about 10 minutes off for a 150 MB file. So it still took roughly 30 minutes for just the upload/download piece. The build, test, zip, and subsequent unzip and push to prod only took about 5 minutes of a 35-minute process. For now I just skip the artifact upload/download step, and the time to prod is about 5 minutes total. It seems to be consistently slow too: I ran the process numerous times without any real differences to note. |

|

Any update on this? It is painfully slow compared to Azure Pipelines' Pipeline Artifact offering. Uploading ~4500 files / ~350 MB took <30 seconds there, while in GitHub Actions it's taking around 5 minutes. |

|

I noticed that actions/cache is nearly an order of magnitude faster than actions/upload-artifact. In my experiments, I found that a single 400 MiB file takes about 150 seconds to upload and 15 seconds to download (with actions/download-artifact). In contrast, putting it into a cache takes 23 seconds, and restoring the cache takes less than 10 seconds. So the reason for the slowness does not seem to be insufficient network bandwidth on the actions runner side. |
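To put the timings in this comment side by side, the sketch below converts them to effective throughput. The numbers are taken from the comment; the cache restore is reported only as "less than 10 seconds", so 10 is used as an upper bound, which makes the printed restore throughput a lower bound.

```python
MIB = 1024 * 1024


def throughput_mib_per_s(size_bytes, seconds):
    """Effective throughput in MiB/s for a transfer of `size_bytes`."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return size_bytes / MIB / seconds


if __name__ == "__main__":
    size = 400 * MIB  # single 400 MiB file, as reported above
    timings = {
        "upload-artifact": 150,
        "download-artifact": 15,
        "cache save": 23,
        "cache restore (upper bound)": 10,
    }
    for step, seconds in timings.items():
        rate = throughput_mib_per_s(size, seconds)
        print(f"{step}: {rate:.1f} MiB/s")
```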

|

I have the same issue: with a large number of files, both upload and download are really slow. |

|

Uploading is very different from caching. You might need different artifacts for each build, and caching does not achieve that. Suppose you build Docker images, which are unique in each build and need to be shared across other steps, and cannot really be cached unless you hack it. What we have done is something like this: and then later: Notice the |

|

I just attempted to upload a large artifact (7.5G) and discovered just how unusably slow this is. I gave up when it became clear it was going to take around an hour to upload. Yeah, that's not going to work. One thing I notice is that it spends the first 5 minutes "starting upload", whatever that means. Logs. Running with debug mode gives us some more insights: Logs

As you can probably tell by the filename, that is not a great idea. I recall reading that the artifact upload did its own gzip compression (although now I can't find where it's mentioned), but when I tried relying on that, the whole thing was even slower. Using parallel zstd is way faster.

But that alone cannot explain the time here. As noted above, caching is way faster, so this can't only be from limited network bandwidth on the VM. These artifacts are going straight to Azure, probably even in the same data center! There's really no excuse for this to be slow. By contrast, when running on my self-hosted runners in GCP, the gcloud upload of approximately this same file takes under a minute (it's a 7.5G build archive, so unfortunately it's going to be somewhat slow no matter what).

I've traced through the code, and whatever it's doing in this while loop is the source of the slowness: https://github.com/actions/toolkit/blob/819157bf872a49cfcc085190da73894e7091c83c/packages/artifact/src/internal/upload-http-client.ts#L321. It's uploading each chunk serially, which probably doesn't help, but gcloud does the same thing with parallel composite upload turned off and finishes in the aforementioned minute, so I don't think that can be it. It's recomputing the HTTP headers for each chunk and creating a new file stream. Maybe the underlying client has connection pooling, so the former isn't an issue? I don't see it in here: https://github.com/actions/toolkit/blob/main/packages/http-client/src/index.ts#L328, but I'm no TypeScript or HTTP request expert. It's hard to tell exactly what the cause is without profiling, but profiling does seem to be in order. |
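For readers unfamiliar with the distinction drawn here between uploading chunks serially and uploading them concurrently, the following is a generic sketch of the two patterns. It is not the toolkit's code and makes no claim about where the time actually goes; `upload_chunk` is a hypothetical callable standing in for one HTTP request, and the chunk size and worker count are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(total_size, chunk_size):
    """Return (start, end) byte offsets, end exclusive, covering total_size."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (start, min(start + chunk_size, total_size))
        for start in range(0, total_size, chunk_size)
    ]


def upload_serial(ranges, upload_chunk):
    """Send one chunk at a time; each request waits for the previous one."""
    return [upload_chunk(start, end) for start, end in ranges]


def upload_concurrent(ranges, upload_chunk, workers=4):
    """Send up to `workers` chunks at once; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda r: upload_chunk(r[0], r[1]), ranges))


if __name__ == "__main__":
    import time

    def fake_upload(start, end):
        # Hypothetical stand-in for a network request with fixed latency.
        time.sleep(0.05)
        return end - start

    ranges = chunk_ranges(total_size=40, chunk_size=4)
    for name, fn in (("serial", upload_serial), ("concurrent", upload_concurrent)):
        began = time.perf_counter()
        sent = sum(fn(ranges, fake_upload))
        print(f"{name}: sent {sent} bytes in {time.perf_counter() - began:.2f} s")
```

Concurrency only helps when per-request latency dominates; as the comment points out, a serial uploader can still be fast, so profiling remains the way to find the real bottleneck.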

|

Is there any update on this? I have a case where I run tests on several different configurations, and the upload artifacts step takes several minutes as a result. If I upload them all in a single tarball, this is not an issue and the process takes a matter of seconds, but this results in a tarball within a zip once the pipeline completes, which is far from ideal. I wonder whether it would make sense for GitHub to pack these into a single tarball internally to upload and then unpack them server-side afterwards? Either that, or run more uploads in parallel to avoid this delay? Ideally, uploading one 40 MiB file shouldn't take orders of magnitude less time than uploading the same data in 4000 10 KiB files, right? They end up in the same zip file once the job has completed. |
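The single-tarball workaround described in this comment can be sketched with Python's standard `tarfile` module. This is a minimal illustration, assuming the test results live in one directory; the function name and the paths in the usage part are made up for the example.

```python
import tarfile
from pathlib import Path


def bundle_directory(src_dir, archive_path):
    """Pack `src_dir` into one gzip-compressed tarball at `archive_path`.

    The archive should be written outside `src_dir` so it does not try to
    include itself.
    """
    src = Path(src_dir)
    if not src.is_dir():
        raise NotADirectoryError(f"{src} is not a directory")
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname keeps paths inside the archive relative to the directory name.
        tar.add(src, arcname=src.name)
    return Path(archive_path)


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        results = Path(tmp) / "test-results"  # hypothetical output directory
        results.mkdir()
        for i in range(100):
            (results / f"case-{i}.log").write_text("ok\n")
        archive = bundle_directory(results, Path(tmp) / "test-results.tar.gz")
        print(archive.name, archive.stat().st_size, "bytes")
```

The resulting single file is then what gets uploaded, at the cost noted above: the downloaded artifact is a tarball inside a zip.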

|

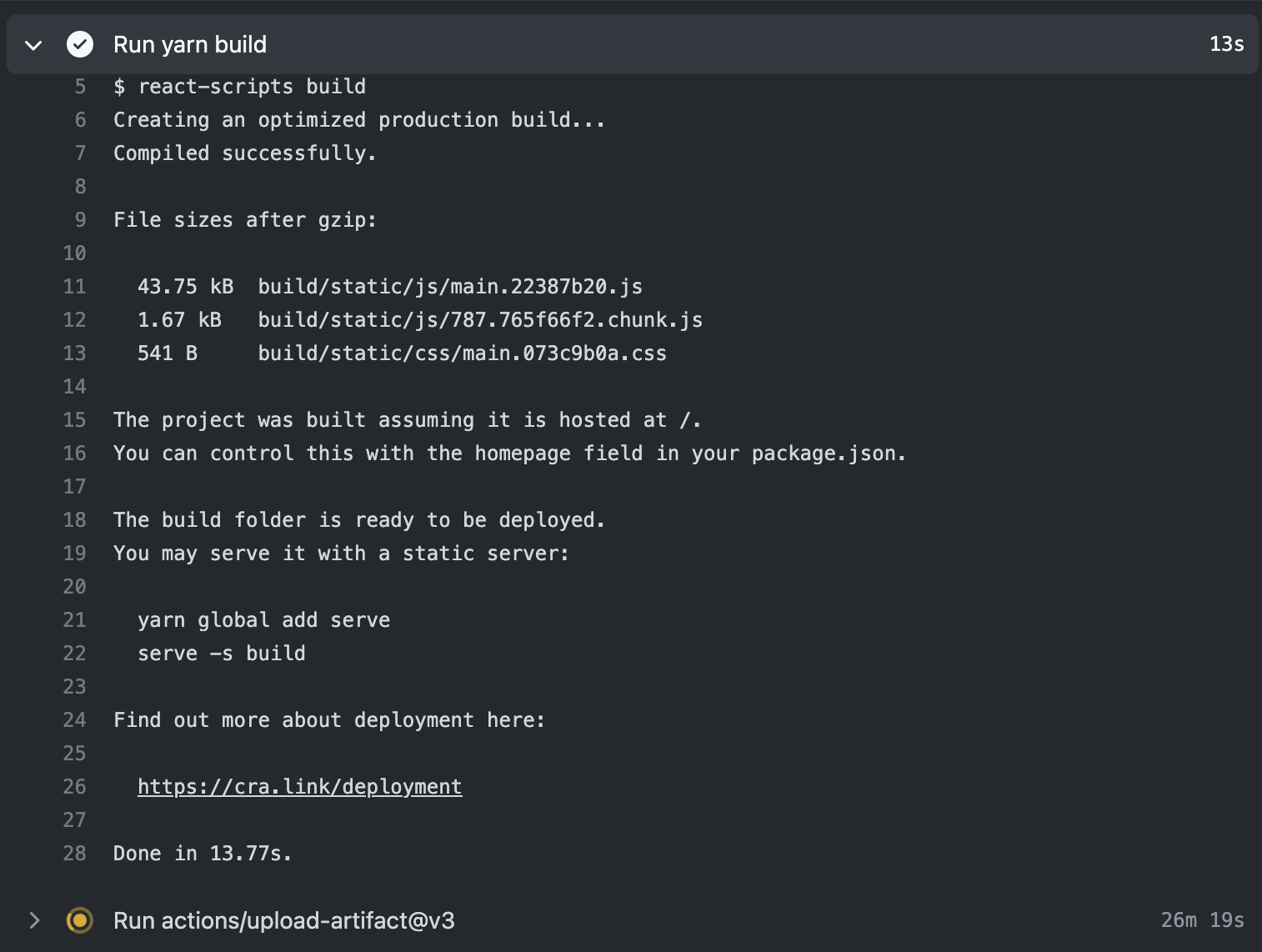

I have the same problem: with a project of 23 MB, it takes ages (almost 1 hour), as mentioned here: https://azureossd.github.io/2022/02/07/React-Deployment-on-App-Service-Linux/

A solution is mentioned in the article |

Reduced my deploy time from 2.5 hours to 15 minutes. Still not good, but a lot better. |

|

Also seeing really slow upload speed on GHE: we have a ~135 MB tar.xz file that takes ~2m30 to upload. Downloading in a later stage takes 11s. Is there some on-the-fly ZIP compression we can disable? I don't need my file to be wrapped in a ZIP, and I suspect the attempts to further compress my tarball are what's taking the time. |

|

Can anyone recommend an action that stores things on S3-compatible storage instead? This is beyond a joke: I spend nearly 40% of my build time (~2m30) waiting for an upload. |

|

I have a gut feeling that this issue might have been addressed in actions/toolkit#1488, so upload speeds might improve whenever actions/upload-artifact@v4 gets released? |

|

From that issue:

So yes! This will be fixed in upload-artifact@v4 💚 |

|

I see there is an My understanding is that this environment variable is supposed to be provided by the runner automatically. |

|

@tech234a we can only wait... They have already implemented the fix; this action just needs to be updated to use the new approach. |

|

With v4... From 20 minutes to upload and download 5 GB of artifacts down to 1.30-2 minutes... Feels good! Thanks a lot for v4. |

|

Upload and download dropped from 5 minutes to 4–12 seconds (~2300 files, 100 MB). |

|

I'm noticing timeouts and internal errors once in a while, but I will keep monitoring it... Maybe an idea is to introduce some kind of retry? |
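One common shape for the retry suggested here is a bounded loop with exponential backoff. The sketch below is a generic illustration, not something the action is known to implement; `operation` is a hypothetical callable wrapping a single upload attempt, and the choice of retryable exceptions and delays is an assumption.

```python
import time


def retry(operation, attempts=3, base_delay=1.0, sleep=time.sleep,
          retry_on=(TimeoutError, ConnectionError)):
    """Call `operation` until it succeeds or `attempts` is exhausted.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between tries.
    Exceptions not listed in `retry_on` are raised immediately.
    """
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky_upload():
        # Hypothetical upload that times out twice before succeeding.
        calls["count"] += 1
        if calls["count"] < 3:
            raise TimeoutError("simulated timeout")
        return "uploaded"

    print(retry(flaky_upload, base_delay=0.01), "after", calls["count"], "tries")
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting.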

|

From one minute to 3-7 seconds. Thank you! |

|

v4 improved the performance quite a lot, for our workflow from 10 min -> 20 s! |

|

As some have already noticed, v4 is officially out! 😃 https://github.blog/changelog/2023-12-14-github-actions-artifacts-v4-is-now-generally-available/ Recommend switching over as one of the big improvements we focused on was speed.

From our testing we've seen upload speed increase by up to 98% in worst-case scenarios. We'll have a blog post sometime in January with more details and some data. Here is a random example from one of our runs comparing v3 to v4: https://gh.io/artifact-v3-vs-v4. If there are any issues like timeouts or upload failures, then please open new issues (there are already a few related to v4) so we can investigate them separately. Closing out! |

|

@konradpabjan Any idea when this will come to GHES please? This current client is uploading large artefacts using GHES and v3 is really slow. |

It seems it is on the roadmap for Oct-Dec this year: github/roadmap#930 |

|

@jommeke22f are you using v4? |

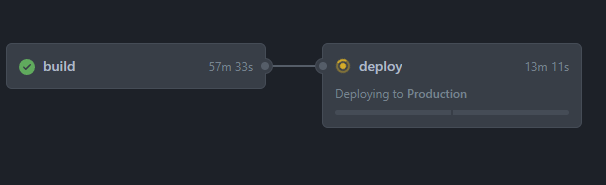

5 minutes to fix bug, 40 minutes to upload/download artifact. Are there any plans in the works to make this more performant?
